I built a log-monitoring tool called Easylogs. This is the story of how and why.

At the start of this year, I decided to course-correct. After wrapping up my previous startup and spending a year working at an amazing one, something still felt missing. I needed to get back to building.

But for months, I couldn’t latch on to an idea. I kept hopping between them, occasionally coasting. Nothing felt inspiring enough. And ideas, the good ones, have a will of their own. If they don’t excite you, building them starts to feel like a chase. I wanted to avoid that. (Of course, there’s nothing wrong with pursuing something because it’s a great business opportunity, but that’s just not me.)

Somewhere in the middle of that search, I stumbled into building a log-monitoring tool. A happy accident, if you will. At my last job, I had done some research into self-hosting a log-tracing tool. Along the way, I ended up learning a lot about the space: the tech stack, the pain points with existing products, the major players. The complexity of the product interested me, and I thought I should explore it further. I have often wondered what it would take to build an alternative to Datadog or its AWS counterpart.

I was still in two minds about whether to pursue this idea. Log monitoring is, to an extent, a solved problem. Between Datadog and a bunch of open-source tools, there’s usually some solution depending on your budget and willingness to compromise on features. Also, it’s a complex product to build. Too many moving parts. But I needed something to keep me busy while I explored ideas I would actually want to spend the next 10 years on.

Pre-LLM, I wouldn’t have touched this idea without a team and a decent amount of funding. But with AI coding tools, I thought maybe I could do something here. So I started building.

My relationship with Easylogs has been on and off. I’d work on it for two weeks, then not touch it for one, then come back. I was building whenever inspiration struck. This rhythm was new for me, and it goes very much against the conventional idea of how products and startups get built, but I started enjoying it. And maybe this is how I’ll build things going forward.

A word on the complexity

Log-tracing is hard. There are a bunch of systems you need in place:

- A method to capture high-throughput data with zero data loss. These logs are mission critical, so you don’t want to lose them in transit, and they can flood in fast.

- A search engine database that stores all this efficiently without exploding your costs.

- High availability. If a server crashes, you need fallbacks in place so you don’t lose visibility.

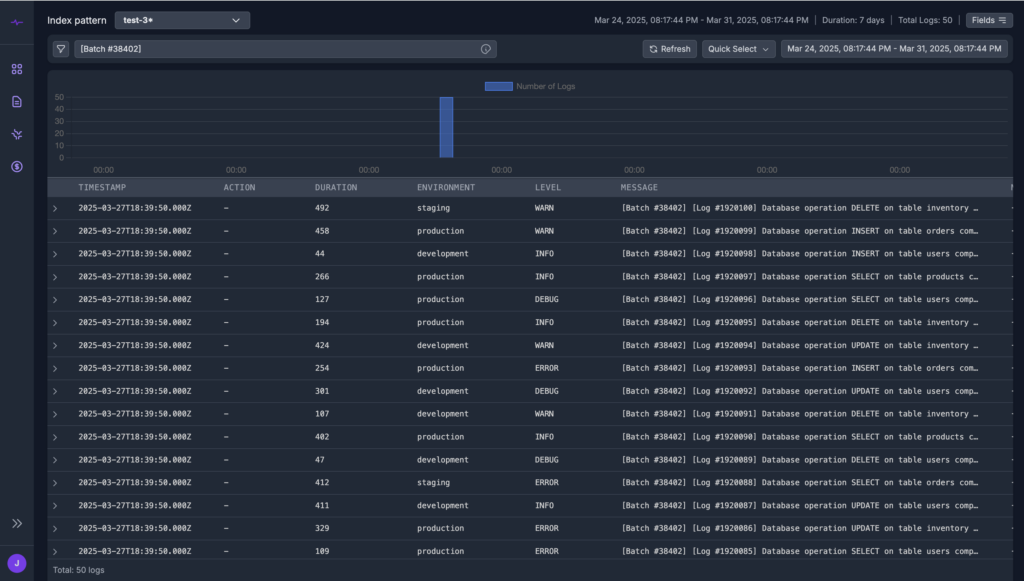

- A UI good enough to show the complex trends, anomalies, and charts.

- The usual SaaS scaffolding: auth, payments, user management, admin console, etc.

And weirdly, it was the complexity that drew me in. Not the problem itself. I know that’s not how you’re supposed to build startups, but honestly, who cares. If it’s fun, you keep going.

So I started from scratch. No managed services. Just raw VM clusters. Because I also wanted to learn.

The Stack

Started with a single-node OpenSearch instance. Then moved to a cluster. Learned all about its internals, Docker vs dedicated instance setups, quirks of the system.

Then came the application part. Initially I thought I’d just fork OpenSearch Dashboards and customize it. The codebase was open-source but… I found it too complex. Too much going on. So I began rewriting things from scratch.

For the backend, I picked Python and Django. Since I’m the only one working on this, I wanted something stable. Between the framework and its ecosystem, Django handles auth, ORM, REST APIs, CORS, CSRF, templating, emails, pretty much everything. And it forces you to organize your code, which makes coming back to it weeks later less painful. I can’t express how much I’ve come to appreciate Django. Sure, Python might not be the most performant language, but I’m not expecting a million users a day either. Compute is cheap. Human time isn’t. And if I need to, I can just scale horizontally.

For the frontend, I stuck to vanilla JS. With AI writing a lot of the frontend, I thought vanilla JS might be good enough, and for now it does the job. I might move to React sooner rather than later, though.

Database is the good old Postgres. Django hides it away nicely with its ORM, and it just works.

The Hardest Part: Logs Ingestor

Ironically, I thought this would be the easiest bit. I planned to use off-the-shelf tools like Vector (originally built by Timber.io, now part of Datadog) or Fluentd. But none of them gave me the control I wanted. I needed a secure ingestion layer and the option to live-stream logs in the future. I couldn’t get any of the tools to behave the way I needed.

My target was to be able to handle 1 million logs per minute. At least for now.
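
To put that target in per-second terms, here is a quick back-of-the-envelope sketch. The 1 million logs per minute figure is from above; the 500-byte average log size is purely an assumption for illustration, not something I measured.

```go
package main

import "fmt"

// PerSecond converts a per-minute log rate into a per-second rate.
func PerSecond(perMinute float64) float64 {
	return perMinute / 60
}

// BytesPerSecond estimates ingest bandwidth for a given per-minute rate
// and an average log size in bytes.
func BytesPerSecond(perMinute, avgLogBytes float64) float64 {
	return PerSecond(perMinute) * avgLogBytes
}

func main() {
	const target = 1_000_000 // logs per minute, the target stated above
	const avgLogBytes = 500  // ASSUMED average log size, not a measured number

	fmt.Printf("%.0f logs/sec\n", PerSecond(target))                      // 16667 logs/sec
	fmt.Printf("%.1f MB/sec\n", BytesPerSecond(target, avgLogBytes)/1e6) // 8.3 MB/sec
}
```

So the ingestor has to sustain roughly 16,700 writes per second, which is why batching matters more than raw request handling.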

So I ended up building the ingestion pipeline myself. It was a challenging call, but it turned out to be the most fun part of the entire project. For the first time, I ventured into Golang. When you want performance, you pick either Rust or Go. Rust is brilliant, but heavy. Go reminded me of C from my university days. So I went with Go, and I later noticed that many high-performance systems are indeed built with it.

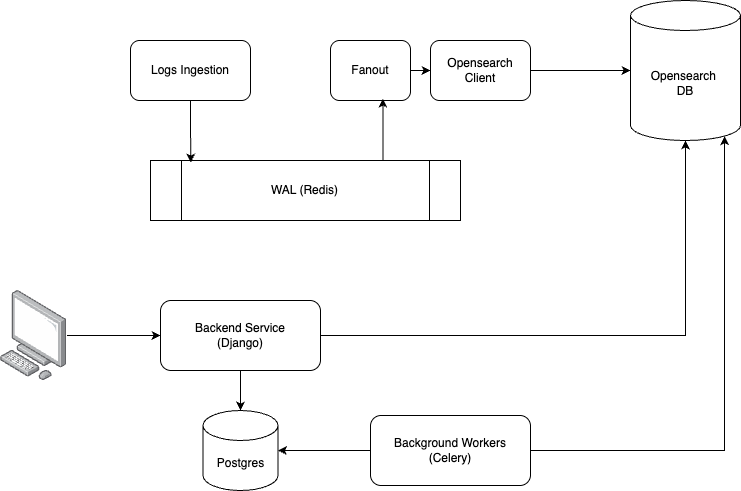

Here’s how I designed the log ingestor module:

- Auth Layer with Caching: Every request is authenticated, and the result is cached with a short TTL so repeat requests skip the expensive checks. Saves CPU cycles.

- Write-Ahead Logging (WAL): Every log is first written to a WAL (currently Redis with persistence enabled). I have the option to move to Kafka if the load increases at some point in the future.

- WAL Processor: Pulls data from Redis, buffers it in memory.

- OpenSearch Client: Picks up buffered data and makes bulk insert calls to OpenSearch.

The whole system is horizontally scalable. I can swap Redis with Kafka down the line, or add new sinks for features like live streaming.
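
Here is a rough sketch of the buffer-then-bulk-insert flow, using only the standard library. Everything named here is an assumption for illustration: a Go channel stands in for the Redis-backed WAL, `LogEntry` is a made-up log shape, and `Drain` and `BulkBody` are hypothetical helpers. The one thing taken from OpenSearch itself is the newline-delimited body format of the `_bulk` endpoint (an action line followed by the document).

```go
package main

import (
	"bytes"
	"encoding/json"
	"fmt"
)

// LogEntry is a hypothetical log shape used for illustration.
type LogEntry struct {
	Service string `json:"service"`
	Level   string `json:"level"`
	Message string `json:"message"`
}

// BulkBody encodes entries in the newline-delimited format the OpenSearch
// _bulk endpoint expects: an action line, then the document, one per line.
func BulkBody(index string, entries []LogEntry) ([]byte, error) {
	var buf bytes.Buffer
	enc := json.NewEncoder(&buf) // Encode appends "\n" after every value
	for _, e := range entries {
		action := map[string]map[string]string{"index": {"_index": index}}
		if err := enc.Encode(action); err != nil {
			return nil, err
		}
		if err := enc.Encode(e); err != nil {
			return nil, err
		}
	}
	return buf.Bytes(), nil
}

// Drain reads entries from wal (a channel standing in for the WAL), buffers
// them in memory, and calls flush whenever batchSize entries are ready or
// the channel is closed.
func Drain(wal <-chan LogEntry, batchSize int, flush func([]LogEntry) error) error {
	batch := make([]LogEntry, 0, batchSize)
	for e := range wal {
		batch = append(batch, e)
		if len(batch) == batchSize {
			if err := flush(batch); err != nil {
				return err
			}
			// Fresh slice, so flush may safely keep a reference to the old one.
			batch = make([]LogEntry, 0, batchSize)
		}
	}
	if len(batch) > 0 {
		return flush(batch)
	}
	return nil
}

func main() {
	wal := make(chan LogEntry, 5)
	for i := 0; i < 5; i++ {
		wal <- LogEntry{Service: "api", Level: "info", Message: fmt.Sprintf("request %d", i)}
	}
	close(wal)

	err := Drain(wal, 2, func(batch []LogEntry) error {
		body, err := BulkBody("logs", batch)
		if err != nil {
			return err
		}
		// A real sink would POST body to the cluster's /_bulk endpoint here.
		fmt.Printf("flushing %d entries (%d bytes)\n", len(batch), len(body))
		return nil
	})
	if err != nil {
		fmt.Println("drain failed:", err)
	}
}
```

Because the sink is just a function, swapping the source (Redis to Kafka) or adding another sink (say, live streaming) does not touch the batching logic. A production version would also flush on a timer so a half-full batch doesn’t sit in memory during quiet periods.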

Wrapping It Up

The whole thing came together two weeks ago. I spent the week after that messing around with the landing page. Honestly, I was procrastinating. Or more accurately, I was afraid of announcing the project and being judged.

But here we are. Also, I guess the landing page is decent-looking. You be the judge.

I wouldn’t have been able to build something this complex without Claude, Cursor, and OpenAI. Seriously, LLMs short-circuited so much of the heavy lifting.

Try out Easylogs. I’m working on adding AI-powered insights and more docs so you can plug it into your existing stack/platforms and extract value fast.

One last thing I learned: it’s good to cycle between projects. Otherwise, you start overbuilding, adding useless features just to stay busy. You can approach work like an artist: work when inspiration strikes. And when it does, things that used to take weeks now take days.